Never out of style: Engineering principles and the Coin Game, pt.2

A running series on principles that don't seem to go out of style. This time: diving deeper into the Coin Game and showing there's more than just Lean principles at play!

Last time in this series, I explained one of my favorite activities at the start of a training: the Coin Game (see this link for full details).

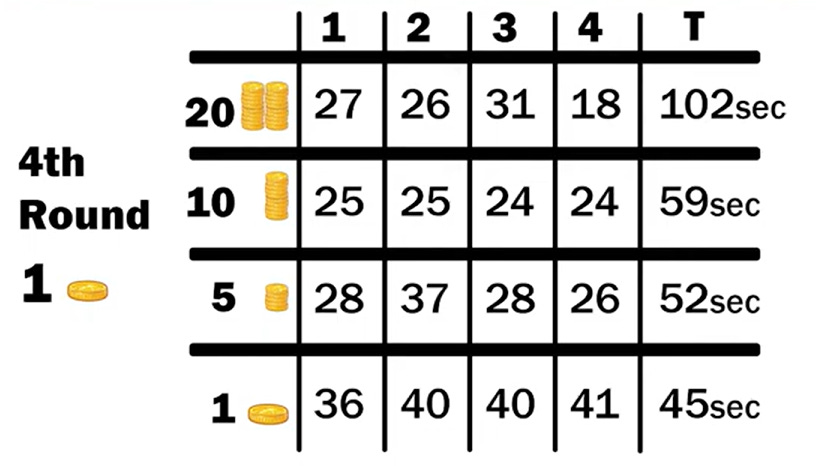

This game is played in several rounds, in which participants simulate pushing ‘new changes’ through the process with a different batch size each round, from large (20 coins) down to single-piece flow (a batch size of 1).

The most obvious observation from this game is that reducing the batch size also reduces the overall time the customer spends waiting.

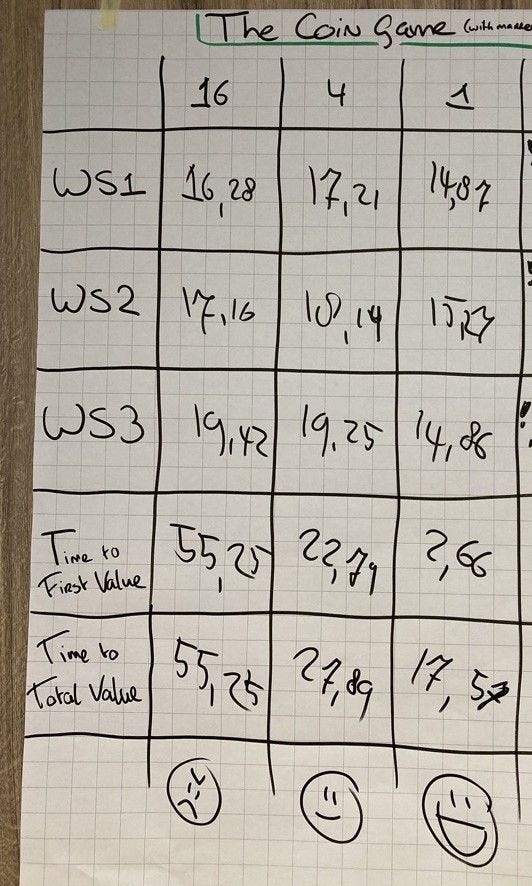

Let’s see some measurements from another example:

As in pt. 1, the result is obvious: reducing our batch size from 16 to 4 to 1 significantly reduces the time until ‘Total Value’ reaches our customer, almost halving it each time. For the same total amount of work, mind you! A brilliant demonstration of the principle of ‘reducing batch sizes’ in real life.
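Why does a smaller batch finish sooner when every coin still gets flipped the same number of times? A simplified model helps, assuming n coins in total, k players in a row who each flip every coin once, one flip taking time t, a batch of size b only moving on once all of its coins are flipped, and no handover time. The first batch needs k·b·t to pass all players; after that, a new batch reaches the customer every b·t:

```latex
T_{\text{total}}(b)
  = \underbrace{k\,b\,t}_{\text{first batch reaches the customer}}
  + \underbrace{\left(\tfrac{n}{b} - 1\right) b\,t}_{\text{remaining batches, one every } b\,t}
  = k\,b\,t + (n - b)\,t
```

With n = 16 and a hypothetical k = 4, this model gives 64t for b = 16, 28t for b = 4 and 19t for b = 1. The gain comes from overlap: with one big batch, only one player is busy at any moment, while with small batches all players work on different batches at the same time.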

How this would translate to the ‘real world’ is something I briefly alluded to last time, so let’s dive a little deeper now.

In most ‘legacy’ or enterprise-y ways of working, it’s common for development teams to bunch up their changes and release them in one big batch, for a myriad of reasons: sometimes once per month or half year, or even once per year in worst-case scenarios. This leads to our ‘20-coin batch’ situation: long lead times, and all value hitting the customer at once, leaving little room to intervene if one coin of the batch turns out to be faulty.

Ideally, we split up these big-bang releases by using tech like pipelines to smooth out automated deployments, and by adding automated tests of various types (unit, integration, system, API and E2E, in approximately that order) to those pipelines to help prevent regressions and make changes easier for devs. That way, deploying and releasing smaller units of work at a time becomes easier. Why?

One of the key reasons teams tend to bunch up changes is the manual overhead associated with releasing: think testing by hand, filling in and executing runbooks, getting approvals and the like. All of these can ‘hurt’, or be experienced as unpleasant or time-consuming, which leads teams to do them less often.

So automating (parts of) our work and building quality in via automated testing is key to reducing our batch size, though reducing it in the first place remains a human decision.

Additionally, techniques like ‘feature flags’ or ‘feature toggles’ can further reduce the risk or pain associated with releasing. These are toggleable ‘buttons’ (in their simplest form, if-else statements) that steer whether or not new code is executed in an environment. This helps decouple deployments (technically installing new code on a server or environment) from releases (making new functionality available to customers), and provides a way to quickly test new changes to your product and, if necessary, revert them. This added focus on stability and risk reduction is often key to getting people outside the development team on board with reducing batch sizes.
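In its simplest form, a feature flag really is just an if-else statement. Below is a minimal sketch in Python; the `FeatureFlags` class and the `new_checkout` flag name are hypothetical, and the flags live in memory here, whereas real setups usually read them from configuration or a dedicated flag service.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureFlags:
    """Hypothetical in-memory flag store (real setups read flags from config or a flag service)."""

    flags: dict[str, bool] = field(default_factory=dict)

    def is_enabled(self, name: str) -> bool:
        # Unknown flags default to off, so new code stays dark until explicitly released.
        return self.flags.get(name, False)

    def set_flag(self, name: str, enabled: bool) -> None:
        self.flags[name] = enabled


def checkout_flow(flags: FeatureFlags) -> str:
    # Both code paths are deployed; the flag decides which one customers get.
    if flags.is_enabled("new_checkout"):
        return "new checkout"
    return "old checkout"


if __name__ == "__main__":
    flags = FeatureFlags()
    print(checkout_flow(flags))  # deployed but not released: old checkout
    flags.set_flag("new_checkout", True)
    print(checkout_flow(flags))  # released: new checkout
    flags.set_flag("new_checkout", False)
    print(checkout_flow(flags))  # reverted without a redeploy: old checkout
```

The new code ships with a regular deployment while switched off; turning the flag on is the release, and turning it off again is the quick revert, with no new deployment needed.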

There are a few more techniques that can help here, but I’ll save those for another time. There’s something else at play during the Coin Game that I’d like to highlight.

Let’s revisit the results I shared from one of my own trainings in pt.1 of this series. Notice the two values on the bottom two rows:

‘Time to First Value’ (i.e. the first batch of coins has reached the customer)

‘Time to Total Value’ (i.e. the last batch of coins has reached the customer)

Why is this important?

In the 16-coin batch example, these two times are exactly the same. That makes sense, as there’s only one big batch: first and last are the same thing. Now ask yourself this: what if there’s a faulty coin in the batch?
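To see where ‘first’ and ‘total’ split apart, here is a rough simulation of a single round. It is a sketch rather than the game’s official scoring: it assumes a hypothetical 4 players, one time unit per coin flip, batches that only move on once every coin in them is flipped, and no handover time.

```python
def coin_game(total_coins: int, batch_size: int, players: int = 4,
              flip_time: float = 1.0) -> tuple[float, float]:
    """Return (time_to_first_value, time_to_total_value) for one simplified round."""
    if total_coins % batch_size != 0:
        raise ValueError("total_coins must be a multiple of batch_size")
    free_at = [0.0] * players  # moment each player can start on a new batch
    delivered = []             # moment each batch reaches the customer
    for _ in range(total_coins // batch_size):
        ready = 0.0  # all coins are on the table at the start of the round
        for p in range(players):
            start = max(ready, free_at[p])          # wait for the batch and for the player
            ready = start + batch_size * flip_time  # flip every coin in the batch
            free_at[p] = ready
        delivered.append(ready)
    return delivered[0], delivered[-1]


for size in (16, 4, 1):
    first, total = coin_game(16, size)
    print(f"batch size {size:2}: first value at {first:g}, total value at {total:g}")
```

Under these assumptions, a batch size of 16 delivers first and total value together at 64 time units, while a batch size of 1 delivers the first coin after just 4 and the last after 19: the customer can give feedback long before the round is over.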

Yep, we’d need to recall the entire batch, much like entire series of cars get recalled when a fault is found in a few cars from one batch. In software development, you’d see this as reverting a big-bang release because one of its many changes has introduced a regression, and finding that needle in the haystack is more costly or time-consuming than just reverting, fixing and trying again. All at the cost of the other changes, which might be more than fine!

Reducing our batch sizes splits the delivery of ‘first’ and ‘total’ value. This is extremely important, as it helps reinforce a core Agile value:

The feedback loop with our customer, and what they think of our product(s), is perhaps THE most important feedback loop there is. Hence our efforts to reduce the lead time to delivering value!

If our first batch turns out to be faulty, or not what the customer wanted, we can take that feedback and fix whatever’s wrong in the next batch(es) so it doesn’t happen again! Better yet, we can ask the customer whether we’re heading in the right direction, so we can sharpen what exactly we do AND don’t need to do: maximizing the amount of work not done.

If we stick with the largest possible batch size, we rob ourselves of the chance to collaborate with the customer more often, and thus risk building all kinds of stuff the customer does not want or need. Is that what we want?

Stay tuned for the next and final part of the Coin Game analysis, where I will dive deeper than ‘just’ Lean and Agile. Let me know what you think, and what you’d like to read about after this series!